1) What is a Knowledge Module?

Knowledge Modules (KMs) are code templates. Each KM is dedicated to an individual task in the overall data integration process. The code in a KM appears in nearly the form in which it will be executed, except that it includes Oracle Data Integrator (ODI) substitution methods that allow it to be used generically by many different integration jobs. The code that is generated and executed is derived from the declarative rules and metadata defined in the ODI Designer module.
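
To make the idea of a "code template" concrete, here is a minimal Python sketch that assumes a simplified placeholder syntax. The <%=...%> tags and the metadata keys (target_table, source_table and so on) are hypothetical. They only mimic the shape of ODI substitution methods and are not the real odiRef API. The same template text yields different executable SQL for two interfaces.

```python
import re

# One KM step, written once in nearly its executable form. The <%=...%> tags are
# simplified, hypothetical placeholders standing in for ODI substitution methods;
# they are NOT the real odiRef API.
KM_STEP = (
    "insert into <%=target_table%> (<%=target_columns%>)\n"
    "select <%=source_expressions%>\n"
    "from <%=source_table%>"
)


def render(template, metadata):
    """Replace every <%=key%> tag with the value taken from the interface metadata."""
    def substitute(match):
        key = match.group(1).strip()
        if key not in metadata:
            raise KeyError(f"no metadata for placeholder {key!r}")
        return metadata[key]
    return re.sub(r"<%=(.*?)%>", substitute, template)


# Two hypothetical interfaces reuse the same template with their own metadata.
CUSTOMERS = {
    "target_table": "DW.DIM_CUSTOMER",
    "target_columns": "CUST_ID, CUST_NAME",
    "source_expressions": "ID, UPPER(NAME)",
    "source_table": "SRC.CUSTOMERS",
}
PRODUCTS = {
    "target_table": "DW.DIM_PRODUCT",
    "target_columns": "PROD_ID, PROD_LABEL",
    "source_expressions": "PID, TRIM(LABEL)",
    "source_table": "SRC.PRODUCTS",
}

if __name__ == "__main__":
    print(render(KM_STEP, CUSTOMERS))
    print(render(KM_STEP, PRODUCTS))
```

The template holds no table or column names. Everything specific to an interface comes from the metadata, which is why one KM can serve many integration jobs.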

2.1) Reverse-engineering Knowledge Modules (RKM)

The role of the RKM is to perform customized reverse-engineering for a model. The RKM is in charge of connecting to the application or metadata provider, then transforming and writing the resulting metadata into Oracle Data Integrator's repository. The metadata is written temporarily into the SNP_REV_xx tables. The RKM then calls the Oracle Data Integrator API to read from these tables and write to Oracle Data Integrator's metadata tables of the work repository in incremental update mode. This is illustrated below.

A typical RKM follows these steps:

a) Cleans up the SNP_REV_xx tables from previous executions using the OdiReverseResetTable tool.

b) Retrieves sub models, datastores, columns, unique keys, foreign keys and conditions from the metadata provider into the SNP_REV_SUB_MODEL, SNP_REV_TABLE, SNP_REV_COL, SNP_REV_KEY, SNP_REV_KEY_COL, SNP_REV_JOIN, SNP_REV_JOIN_COL and SNP_REV_COND tables.

c) Updates the model in the work repository by calling the OdiReverseSetMetaData tool.
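
The three steps can be sketched in Python with sqlite3. The sketch assumes heavily simplified, hypothetical versions of the SNP_REV_TABLE and SNP_REV_COL tables and of the repository model tables. The real ODI tables have many more columns, and in ODI the first and last steps are performed by the OdiReverseResetTable and OdiReverseSetMetaData tools, not by functions like these. SQLite's own catalog plays the role of the metadata provider.

```python
import sqlite3

# Hypothetical, heavily simplified stand-ins for SNP_REV_TABLE / SNP_REV_COL and for
# the model tables of the work repository. The real ODI tables have many more columns.
REPO_DDL = """
create table SNP_REV_TABLE (TABLE_NAME text);
create table SNP_REV_COL   (TABLE_NAME text, COL_NAME text, DATA_TYPE text, POS integer);
create table MODEL_TABLE   (TABLE_NAME text primary key);
create table MODEL_COL     (TABLE_NAME text, COL_NAME text, DATA_TYPE text, POS integer,
                            primary key (TABLE_NAME, COL_NAME));
"""


def reset_rev_tables(repo):
    """Step a): clear leftovers of a previous run (the role OdiReverseResetTable plays)."""
    repo.execute("delete from SNP_REV_COL")
    repo.execute("delete from SNP_REV_TABLE")


def retrieve_metadata(provider, repo):
    """Step b): copy datastores and columns from the metadata provider into SNP_REV_xx."""
    tables = [row[0] for row in provider.execute(
        "select name from sqlite_master where type = 'table' order by name")]
    for table in tables:
        repo.execute("insert into SNP_REV_TABLE values (?)", (table,))
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
        for cid, name, col_type, *_ in provider.execute(f"pragma table_info('{table}')"):
            repo.execute("insert into SNP_REV_COL values (?, ?, ?, ?)",
                         (table, name, col_type, cid + 1))


def set_metadata(repo):
    """Step c): merge SNP_REV_xx into the model in incremental update mode (the role
    OdiReverseSetMetaData plays): new objects are added, existing ones overwritten."""
    repo.execute("insert or ignore into MODEL_TABLE select TABLE_NAME from SNP_REV_TABLE")
    repo.execute("insert or replace into MODEL_COL select * from SNP_REV_COL")
    repo.commit()


if __name__ == "__main__":
    provider = sqlite3.connect(":memory:")  # plays the application / metadata provider
    provider.execute("create table CUSTOMER (ID integer primary key, NAME text)")
    repo = sqlite3.connect(":memory:")      # plays the work repository
    repo.executescript(REPO_DDL)
    for _ in range(2):  # re-running the RKM must not duplicate the model
        reset_rev_tables(repo)
        retrieve_metadata(provider, repo)
        set_metadata(repo)
    print(repo.execute("select * from MODEL_COL order by POS").fetchall())
```

Running the three steps twice still leaves a single copy of each column in MODEL_COL. The reset step combined with incremental update mode is what makes repeated reverse-engineering safe.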

Available RKM in ODI 11.1.1

1) RKM DB2/400

2) RKM FILE (FROM EXCEL)

3) RKM HYPERION ESSBASE

4) RKM FINANCIAL MANAGEMENT

5) RKM HYPERION PLANNING

6) RKM INFORMIX

7) RKM INFORMIX SE

8) RKM MSSQL

9) RKM ORACLE

10) RKM ORACLE (JYTHON)

11) RKM ORACLE DATA QUALITY

12) RKM ORACLE OLAP (JYTHON)

13) RKM SQL (JYTHON)

14) RKM TERADATA

2.2) Check Knowledge Modules (CKM)

The CKM is in charge of checking that records of a data set are consistent with defined constraints. The CKM is used to maintain data integrity and participates in the overall data quality initiative. The CKM can be used in 2 ways:

a) To check the consistency of existing data. This can be done on any datastore or within interfaces, by setting the STATIC_CONTROL option to "Yes". In the first case, the data checked is the data currently in the datastore. In the second case, data in the target datastore is checked after it is loaded.

b) To check the consistency of the incoming data before loading the records to a target datastore. This is done by using the FLOW_CONTROL option. In this case, the CKM simulates the constraints of the target datastore on the resulting flow prior to writing to the target.

The CKM accepts a set of constraints and the name of the table to check. It creates an "E$" error table, to which it writes all the rejected records. The CKM can also remove the erroneous records from the checked result set.

The following figures show how a CKM operates in both STATIC_CONTROL and FLOW_CONTROL modes.

(STATIC_CONTROL)

(FLOW_CONTROL)

In FLOW_CONTROL mode, the CKM reads the constraints of the target table of the Interface. It checks these constraints against the data contained in the "I$" flow table of the staging area. Records that violate these constraints are written to the "E$" table of the staging area.

In both cases, a CKM usually performs the following tasks:

a) Create the "E$" error table on the staging area. The error table should contain the same columns as the datastore as well as additional columns to trace error messages, check origin, check date etc.

b) Isolate the erroneous records in the "E$" table for each primary key, alternate key, foreign key, condition and mandatory column that needs to be checked.

c) If required, remove erroneous records from the table that has been checked.

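
A minimal sqlite3 sketch of these three tasks is shown here, limited to a primary key check and mandatory-column checks. Several details are assumptions made for illustration: the function name, the extra E$ columns (ORIGIN_ROWID, ERR_TYPE, ERR_MESS, CHECK_DATE) and the use of SQLite rowids to locate rejected rows. A real CKM generates equivalent SQL from the constraints declared in the model.

```python
import sqlite3


def q(name):
    """Quote an identifier so names such as I$ORDERS or E$ORDERS are safe in SQLite."""
    return '"' + name.replace('"', '""') + '"'


def run_ckm(conn, table, error_table, pk_cols, mandatory_cols, remove_errors=True):
    """Hypothetical CKM sketch: check a primary key and NOT NULL columns on `table`,
    write violating rows to `error_table`, and optionally delete them from `table`.
    Returns the number of errors recorded (a row can produce several errors)."""
    cols = [row[1] for row in conn.execute(f"pragma table_info({q(table)})")]
    col_list = ", ".join(q(c) for c in cols)
    err = q(error_table)
    # a) E$ table: the checked columns plus columns that trace the error.
    #    (Recreated on every run here for simplicity.)
    conn.execute(f"drop table if exists {err}")
    conn.execute(f"create table {err} ({col_list}, ORIGIN_ROWID integer, "
                 f"ERR_TYPE text, ERR_MESS text, CHECK_DATE text default current_timestamp)")
    insert_err = f"insert into {err} ({col_list}, ORIGIN_ROWID, ERR_TYPE, ERR_MESS) "
    # b) Isolate erroneous rows: duplicate primary keys...
    pk = ", ".join(q(c) for c in pk_cols)
    conn.execute(insert_err + f"select {col_list}, rowid, 'PK', 'duplicate primary key' "
                 f"from {q(table)} where ({pk}) in "
                 f"(select {pk} from {q(table)} group by {pk} having count(*) > 1)")
    # ...and missing values in mandatory columns.
    for col in mandatory_cols:
        conn.execute(insert_err + f"select {col_list}, rowid, 'NN', ? "
                     f"from {q(table)} where {q(col)} is null", (f"{col} is mandatory",))
    # c) If required, remove the erroneous rows from the checked table.
    if remove_errors:
        conn.execute(f"delete from {q(table)} where rowid in (select ORIGIN_ROWID from {err})")
    conn.commit()
    return conn.execute(f"select count(*) from {err}").fetchone()[0]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    # A flow table carries no constraints; the CKM simulates the target's constraints.
    conn.execute('create table "I$ORDERS" (ORDER_ID integer, CUSTOMER text)')
    conn.executemany('insert into "I$ORDERS" values (?, ?)',
                     [(1, "ACME"), (2, None), (3, "ZETA"), (3, "ZETA2")])
    print(run_ckm(conn, "I$ORDERS", "E$ORDERS", ["ORDER_ID"], ["CUSTOMER"]))
    print(conn.execute('select * from "I$ORDERS"').fetchall())
```

When called on an "I$" flow table with remove_errors=True, the sketch behaves like FLOW_CONTROL. When called on the target datastore itself with remove_errors=False, it resembles a STATIC_CONTROL audit that leaves the checked data untouched.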

Available CKM in ODI 11.1.1

a) CKM HSQL

b) CKM NETEZZA

c) CKM ORACLE

d) CKM SQL

e) CKM SYBASE IQ

f) CKM TERADATA

2.3) Loading Knowledge Modules (LKM)

An LKM is in charge of loading source data from a remote server to the staging area. It is used by interfaces when some of the source datastores are not on the same data server as the staging area. The LKM implements the declarative rules that need to be executed on the source server and retrieves a single result set that it stores in a "C$" table in the staging area, as illustrated below.

a) The LKM creates the "C$" temporary table in the staging area. This table will hold records loaded from the source server.

b) The LKM obtains a set of pre-transformed records from the source server by executing the appropriate transformations on the source. Usually, this is done by a single SQL SELECT query when the source server is an RDBMS. When the source doesn't have SQL capabilities (such as flat files or applications), the LKM simply reads the source data with the appropriate method (read file or execute API).

c) The LKM loads the records into the "C$" table of the staging area.

An interface may require several LKMs when it uses datastores from different sources. When all source datastores are on the same data server as the staging area, no LKM is required.
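
The three LKM steps can be sketched with two sqlite3 connections that stand in for the source server and the staging area. This is roughly the generic, row-fetching pattern of an LKM such as LKM SQL to SQL. The function name and the C$ table name are hypothetical. The native variants (SQLLDR, BCP, EXTERNAL TABLE and similar) replace the fetch-and-insert loop with the database's own bulk-loading utility.

```python
import sqlite3


def run_lkm(source, staging, select_sql, c_table, columns, batch_size=1000):
    """Hypothetical LKM sketch: move one pre-transformed result set from a source
    connection into a C$ work table on the staging connection. Returns rows loaded."""
    c_name = '"' + c_table + '"'
    # a) Create the C$ temporary table in the staging area.
    staging.execute(f"drop table if exists {c_name}")
    staging.execute(f"create table {c_name} ({', '.join(columns)})")
    # b) Run the source-side filters and transformations as a single SELECT.
    cursor = source.execute(select_sql)
    placeholders = ", ".join("?" for _ in columns)
    insert_sql = f"insert into {c_name} values ({placeholders})"
    # c) Load the result set into C$ batch by batch, so memory use stays bounded.
    loaded = 0
    while True:
        rows = cursor.fetchmany(batch_size)
        if not rows:
            break
        staging.executemany(insert_sql, rows)
        loaded += len(rows)
    staging.commit()
    return loaded


if __name__ == "__main__":
    source = sqlite3.connect(":memory:")   # plays the remote source server
    source.execute("create table CUSTOMERS (ID integer, NAME text, COUNTRY text)")
    source.executemany("insert into CUSTOMERS values (?, ?, ?)",
                       [(1, "ann", "FR"), (2, "bob", "US"), (3, "cid", "FR")])
    staging = sqlite3.connect(":memory:")  # plays the staging area
    n = run_lkm(source, staging,
                "select ID, upper(NAME) from CUSTOMERS where COUNTRY = 'FR'",
                "C$CUSTOMERS", ["CUST_ID", "CUST_NAME"])
    print(n, staging.execute('select * from "C$CUSTOMERS"').fetchall())
```

The filter and the UPPER transformation run on the source, so only the two matching rows cross the network. This is the point of executing source-side rules before loading.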

Available LKM in ODI 11.1.1

1) LKM ATUNITY TO SQL

2) LKM DB2 400 JOURNAL TO SQL

3) LKM DB2 400 JOURNAL TO DB2 400

4) LKM DB2 UDB to DB2 UDB (EXPORT_IMPORT)

5) LKM File to DB2 UDB (LOAD)

6) LKM File to MSSQL (BULK)

7) LKM File to Netezza (EXTERNAL TABLE)

8) LKM File to Netezza (NZLOAD)

9) LKM File to Oracle (EXTERNAL TABLE)

10) LKM File to Oracle (SQLLDR)

11) LKM File to SQL

12) LKM File to Sybase IQ (LOAD TABLE)

13) LKM File to Teradata (TTU)

14) LKM Hyperion Essbase DATA to SQL

15) LKM Hyperion Essbase METADATA to SQL

16) LKM Hyperion Financial Management Data to SQL

17) LKM Hyperion Financial Management Members To SQL

18) LKM Informix to Informix (SAME SERVER)

19) LKM JMS to SQL

20) LKM JMS XML to SQL

21) LKM MSSQL to MSSQL (BCP)

22) LKM MSSQL to MSSQL (LINKED SERVERS)

23) LKM MSSQL to ORACLE (BCP SQLLDR)

24) LKM MSSQL to SQL (ESB XREF)

25) LKM Oracle BI to Oracle (DBLINK)

26) LKM Oracle BI to SQL

27) LKM Oracle to Oracle (data pump)

28) LKM Oracle to Oracle (DBLINK)

29) LKM SQL to DB2 400 (CPYFRMIMPF)

30) LKM SQL to DB2 UDB

31) LKM SQL to DB2 UDB (LOAD)

32) LKM SQL to MSSQL

33) LKM SQL to MSSQL (BULK)

34) LKM SQL to Oracle

35) LKM SQL to SQL

36) LKM SQL to SQL (ESB XREF)

37) LKM SQL to SQL (JYTHON)

38) LKM SQL to SQL (row by row)

39) LKM SQL to Sybase ASE

40) LKM SQL to Sybase ASE (BCP)

41) LKM SQL to Sybase IQ (LOAD TABLE)

42) LKM SQL to Teradata (TTU)

43) LKM Sybase ASE to Sybase ASE (BCP)

2.4) Integration Knowledge Modules (IKM)

The IKM is in charge of writing the final, transformed data to the target table. Every interface uses a single IKM. When the IKM is started, it assumes that all loading phases for the remote servers have already carried out their tasks. This means that all remote source data sets have been loaded by LKMs into "C$" temporary tables in the staging area, or the source datastores are on the same data server as the staging area.

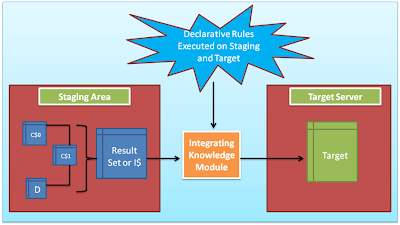

Therefore, the IKM simply needs to execute the "Staging and Target" transformations, joins and filters on the "C$" tables, and tables located on the same data server as the staging area. The resulting set is usually processed by the IKM and written into the "I$" temporary table before loading it to the target. These final transformed records can be written in several ways depending on the IKM selected in your interface. They may be simply appended to the target, or compared for incremental updates or for slowly changing dimensions. There are 2 types of IKMs: those that assume that the staging area is on the same server as the target datastore, and those that can be used when it is not. These are illustrated below:

(Staging Area on Target)

When the staging area is on the target server, the IKM usually follows these steps:

a) The IKM executes a single set-oriented SELECT statement to carry out staging area and target declarative rules on all "C$" tables and local tables (such as D in the figure). This generates a result set.

b) Simple "append" IKMs directly write this result set into the target table. More complex IKMs create an "I$" table to store this result set.

c) If the data flow needs to be checked against target constraints, the IKM calls a CKM to isolate erroneous records and cleanse the "I$" table.

d) The IKM writes records from the "I$" table to the target following the defined strategy (incremental update, slowly changing dimension, etc.).

e) The IKM drops the "I$" temporary table.

f) Optionally, the IKM can call the CKM again to check the consistency of the target datastore.

These types of KMs do not manipulate data outside of the target server. Data processing is set-oriented for maximum efficiency when performing jobs on large volumes.
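
The steps above can be sketched for an incremental update strategy. One sqlite3 database plays the target server that also hosts the staging area. The function, its arguments and the IND_UPDATE flag column are assumptions for illustration. Step c), the optional CKM call, appears only as a comment.

```python
import sqlite3


def ikm_incremental_update(conn, select_sql, target, key, columns):
    """Hypothetical incremental-update IKM sketch, staging area on the target server.
    `select_sql` must return `columns` in order. Returns (inserted, updated)."""
    i_table = '"I$' + target + '"'
    col_list = ", ".join(columns)
    # a)+b) One set-oriented SELECT over C$/local tables, stored in I$ with a flag column.
    conn.execute(f"drop table if exists {i_table}")
    conn.execute(f"create table {i_table} ({col_list}, IND_UPDATE text default 'I')")
    conn.execute(f"insert into {i_table} ({col_list}) {select_sql}")
    # c) A flow-control CKM would cleanse I$ at this point (omitted here).
    # Flag rows whose key already exists in the target as updates.
    conn.execute(f"update {i_table} set IND_UPDATE = 'U' "
                 f"where {key} in (select {key} from {target})")
    # d) Apply the strategy: update existing rows, then insert new ones.
    set_clause = ", ".join(
        f"{c} = (select i.{c} from {i_table} i where i.{key} = {target}.{key})"
        for c in columns if c != key)
    if set_clause:
        conn.execute(f"update {target} set {set_clause} "
                     f"where {key} in (select {key} from {i_table} where IND_UPDATE = 'U')")
    conn.execute(f"insert into {target} ({col_list}) "
                 f"select {col_list} from {i_table} where IND_UPDATE = 'I'")
    updated = conn.execute(f"select count(*) from {i_table} where IND_UPDATE = 'U'").fetchone()[0]
    inserted = conn.execute(f"select count(*) from {i_table} where IND_UPDATE = 'I'").fetchone()[0]
    # e) Drop the I$ temporary table.
    conn.execute(f"drop table {i_table}")
    conn.commit()
    return inserted, updated


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute('create table "C$CUSTOMERS" (ID integer, NAME text)')  # loaded by an LKM
    conn.executemany('insert into "C$CUSTOMERS" values (?, ?)', [(1, "ann"), (2, "bob")])
    conn.execute("create table DIM_CUSTOMER (CUST_ID integer primary key, CUST_NAME text)")
    conn.execute("insert into DIM_CUSTOMER values (1, 'OLD')")
    print(ikm_incremental_update(conn, 'select ID, upper(NAME) from "C$CUSTOMERS"',
                                 "DIM_CUSTOMER", "CUST_ID", ["CUST_ID", "CUST_NAME"]))
    print(conn.execute("select * from DIM_CUSTOMER").fetchall())
```

Every statement here works on whole sets of rows inside one server. No row leaves the target, which is the efficiency argument made in the paragraph above.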

(Staging Area Different from Target)

a) The IKM executes a single set-oriented SELECT statement to carry out declarative rules on all "C$" tables and tables located on the staging area (such as D in the figure). This generates a result set.

b) The IKM loads this result set into the target datastore, following the defined strategy (append or incremental update).

This architecture has certain limitations, such as:

a) A CKM cannot be used to perform a data integrity audit on the data being processed.

b) Data needs to be extracted from the staging area before being loaded to the target, which may lead to performance issues.

Available IKM in ODI 11.1.1

1) IKM Access Incremental Update

2) IKM DB2 400 Incremental Update

3) IKM DB2 400 Incremental Update (CPYF)

4) IKM DB2 400 Slowly Changing Dimension

5) IKM DB2 UDB Incremental Update

6) IKM DB2 UDB Slowly Changing Dimension

7) IKM File to Teradata (TTU)

8) IKM Informix Incremental Update

9) IKM MSSQL Incremental Update

10) IKM MSSQL Slowly Changing Dimension

11) IKM Netezza Control Append

12) IKM Netezza Incremental Update

13) IKM Netezza To File (EXTERNAL TABLE)

14) IKM Oracle AW Incremental Update

15) IKM Oracle BI to SQL Append

16) IKM Oracle Incremental Update

17) IKM Oracle Incremental Update (MERGE)

18) IKM Oracle Incremental Update (PL SQL)

19) IKM Oracle Multi Table Insert

20) IKM Oracle Slowly Changing Dimension

21) IKM Oracle Spatial Incremental Update

22) IKM SQL Control Append

23) IKM SQL Control Append (ESB XREF)

24) IKM SQL Incremental Update

25) IKM SQL Incremental Update (row by row)

26) IKM SQL to File Append

27) IKM SQL to Hyperion Essbase (DATA)

28) IKM SQL to Hyperion Essbase (METADATA)

29) IKM SQL to Hyperion Financial Management Data

30) IKM SQL to Hyperion Financial Management Dimension

31) IKM SQL to Hyperion Planning

32) IKM SQL to JMS Append

33) IKM SQL to JMS XML Append

34) IKM SQL to SQL Append

35) IKM SQL to Teradata (TTU)

36) IKM Sybase ASE Incremental Update

37) IKM Sybase ASE Slowly Changing Dimension

38) IKM Sybase IQ Incremental Update

39) IKM Sybase IQ Slowly Changing Dimension

40) IKM Teradata Control Append

41) IKM Teradata Incremental Update

42) IKM Teradata Multi Statement

43) IKM Teradata Slowly Changing Dimension

44) IKM Teradata to File (TTU)

45) IKM XML Control Append

2.5) Journalizing Knowledge Modules (JKM)

JKMs create the infrastructure for Change Data Capture on a model, a sub model or a datastore. JKMs are not used in interfaces, but rather within a model to define how the CDC infrastructure is initialized. This infrastructure is composed of a subscribers table, a table of changes, views on this table and one or more triggers or log capture programs as illustrated below.
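
A minimal sqlite3 sketch of the trigger-based variant of this infrastructure is shown here. It builds a subscribers table, a J$ table of changes, a JV$ view on that table and one trigger per DML event. The table, view, trigger and column names are assumptions for illustration. Real JKMs also manage how subscribers consume and purge the captured changes.

```python
import sqlite3


def install_simple_jkm(conn, table, key):
    """Hypothetical sketch of trigger-based journalizing for one datastore:
    a subscribers table, a J$ table of changes, a JV$ view and capture triggers."""
    j = f'"J${table}"'
    triggers = []
    # In this sketch inserts and updates are both journalized as 'I', deletes as 'D';
    # only the key is captured, the current row is read from the source table later.
    for event, ref, flag in (("insert", "new", "I"), ("update", "new", "I"),
                             ("delete", "old", "D")):
        triggers.append(f"""
        create trigger if not exists "T${table}_{event}" after {event} on {table}
        begin
            insert into {j} (JRN_SUBSCRIBER, {key}, JRN_FLAG)
            select SUBSCRIBER, {ref}.{key}, '{flag}' from SUBSCRIBERS;
        end;""")
    conn.executescript(f"""
        create table if not exists SUBSCRIBERS (SUBSCRIBER text primary key);
        create table if not exists {j} (JRN_SUBSCRIBER text, {key},
            JRN_FLAG text, JRN_DATE text default current_timestamp);
        create view if not exists "JV${table}" as select * from {j};
    """ + "".join(triggers))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("create table CUSTOMER (CUST_ID integer primary key, NAME text)")
    install_simple_jkm(conn, "CUSTOMER", "CUST_ID")
    conn.execute("insert into SUBSCRIBERS values ('DWH')")
    conn.execute("insert into CUSTOMER values (1, 'ann')")
    conn.execute("update CUSTOMER set NAME = 'ANN' where CUST_ID = 1")
    conn.execute("delete from CUSTOMER where CUST_ID = 1")
    print(conn.execute('select JRN_SUBSCRIBER, CUST_ID, JRN_FLAG from "JV$CUSTOMER"').fetchall())
```

Each change produces one journal row per registered subscriber, so several consumers can track the same datastore independently.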

Available JKM in ODI 11.1.1

1) JKM DB2 400 Consistent

2) JKM DB2 400 Simple

3) JKM DB2 400 Simple (Journal)

4) JKM DB2 UDB Consistent

5) JKM DB2 UDB Simple

6) JKM HSQL Consistent

7) JKM HSQL Simple

8) JKM Informix Consistent

9) JKM Informix Simple

10) JKM MSSQL Consistent

11) JKM MSSQL Simple

12) JKM Oracle 10g Consistent (Streams)

13) JKM Oracle 11g Consistent (Streams)

14) JKM Oracle Consistent

15) JKM Oracle Consistent (Update Date)

16) JKM Oracle Simple

17) JKM Oracle to Oracle Consistent (OGG)

18) JKM Sybase ASE Consistent

19) JKM Sybase ASE Simple

2.6) Service Knowledge Modules (SKM)

SKMs are in charge of creating and deploying data manipulation Web Services to your Service Oriented Architecture (SOA) infrastructure. SKMs are set on a model. They define the different operations to generate for each datastore's web service. Unlike other KMs, SKMs do not generate executable code but rather the Web Services deployment archive files. SKMs are designed to generate Java code using Oracle Data Integrator's framework for Web Services. The code is then compiled and eventually deployed on the Application Server's containers.
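
As a rough illustration of generating code from datastore metadata, the Python sketch below emits Java source for an entity class and a service interface. Several parts are hypothetical and do not reflect what ODI's SKMs actually produce: the operation names (getX, addX, updateX, deleteX), the column-to-Java type mapping passed in by the caller, and the function names. Compilation and deployment are left out.

```python
def to_camel(name, upper_first=True):
    """CUST_ID -> CustId (or custId when upper_first is False)."""
    text = "".join(part.capitalize() for part in name.lower().split("_"))
    return text if upper_first else text[0].lower() + text[1:]


def generate_service(datastore, columns, key):
    """Hypothetical sketch: emit Java source files for one datastore's data service.
    `columns` maps column names to Java types; operation names are illustrative only.
    Returns {file name: source}, one public type per file as Java requires."""
    entity = to_camel(datastore)
    key_param = f"{columns[key]} {to_camel(key, False)}"
    fields = [f"    public {jtype} {to_camel(col, False)};" for col, jtype in columns.items()]
    entity_src = "\n".join([f"public class {entity} {{", *fields, "}"])
    service_src = "\n".join([
        f"public interface {entity}Service {{",
        f"    {entity} get{entity}({key_param});",
        f"    void add{entity}({entity} row);",
        f"    void update{entity}({entity} row);",
        f"    void delete{entity}({key_param});",
        "}",
    ])
    return {f"{entity}.java": entity_src, f"{entity}Service.java": service_src}


if __name__ == "__main__":
    files = generate_service("CUSTOMER", {"CUST_ID": "long", "NAME": "String"}, "CUST_ID")
    for file_name, source in files.items():
        print(f"// {file_name}\n{source}\n")
```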

Available SKM in ODI 11.1.1

1) SKM HSQL

2) SKM IBM UDB

3) SKM Informix

4) SKM Oracle

Hello, Can you please provide a simple example on how to use LKM SQL to MSSQL (BULK). How is it different from LKM SQL to ORACLE? In what scenarios will this LKM be used?
